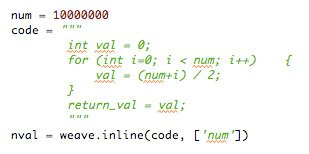

Fig 1.1: Screen captures of orange and apple shaders

For an OpenGL project I am helping fellow NYU-ITP graduate student Matt Parker with, I developed two real-time GLSL shaders. They are applied to poly-spheres representing two types of fruit: apples and oranges. Because of the sheer number of spheres being displayed, my objective was to get a high amount of surface detail from a limited number of polygons. To accomplish this, I combined displacement and normal-mapping calculations in the shaders, so all surface detail is produced on the GPU.

I have already written about how displacement is done through shaders. This set of shaders uses the very same technique:

Geometry Displacement through GLSL
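For readers who skip that post, here is a minimal GLSL 1.20 vertex-shader sketch of texture-driven displacement along the normal. It illustrates the general technique and is not the code from that post. `displacementMap`, `displacementScale` and the `normal` varying are hypothetical names that the host application (and the fragment shader) would need to supply or match.

```glsl
#version 120

// Hypothetical uniforms: a grayscale height map and a displacement scale
uniform sampler2D displacementMap;
uniform float displacementScale;

// Eye-space normal handed to the fragment shader for lighting
varying vec3 normal;

void main()
{
    gl_TexCoord[0] = gl_MultiTexCoord0;

    // Vertex texture fetch at an explicit LOD of 0 (no derivatives exist here)
    float height = texture2DLod(displacementMap, gl_MultiTexCoord0.st, 0.0).r;

    // Push the vertex outward along its object-space normal
    vec4 displaced = gl_Vertex + vec4(gl_Normal * height * displacementScale, 0.0);

    // This is still the undisplaced normal; fine lighting detail is
    // expected to come from the normal map instead
    normal = normalize(gl_NormalMatrix * gl_Normal);
    gl_Position = gl_ModelViewProjectionMatrix * displaced;
}
```

Vertex texture fetch requires hardware that exposes at least one vertex texture unit (`GL_MAX_VERTEX_TEXTURE_IMAGE_UNITS` greater than 0). Query that value before relying on this approach.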

The way color varies across the surface in response to light differs from my past shader work. Here I have written in diffuse, ambient, and specular calculations that access properties of the light (L) and of the given surface material (M), where:

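Diffuse (where **N** is the surface normal and **L** the normalized direction to the light):

$$I_{diffuse} = L_{diffuse} \, M_{diffuse} \, \max(\mathbf{N} \cdot \mathbf{L}, 0)$$

Ambient:

$$I_{ambient} = L_{ambient} \, M_{ambient}$$

Specular (where H is the given half-vector):

$$I_{specular} = L_{specular} \, M_{specular} \, \max(\mathbf{N} \cdot \mathbf{H}, 0)^{M_{shininess}}$$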
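A minimal GLSL 1.20 fragment-shader sketch can combine the ambient, diffuse, and specular terms. It makes three assumptions:

- There is a single directional light in `gl_LightSource[0]`.
- The vertex shader passes an eye-space normal in a varying named `normal`, which is a hypothetical name.
- The fixed-function `halfVector` supplies H for the specular term.

It is an illustration, not the exact shader used for the fruit.

```glsl
#version 120

// Eye-space normal interpolated from the vertex shader (hypothetical name)
varying vec3 normal;

void main()
{
    vec3 N = normalize(normal);
    // Directional light assumed: position.xyz points toward the light
    vec3 L = normalize(gl_LightSource[0].position.xyz);
    vec3 H = normalize(gl_LightSource[0].halfVector.xyz);

    // Ambient: light ambient times material ambient
    vec4 ambient = gl_LightSource[0].ambient * gl_FrontMaterial.ambient;

    // Diffuse: scaled by the cosine of the angle between N and L
    float NdotL = max(dot(N, L), 0.0);
    vec4 diffuse = gl_LightSource[0].diffuse * gl_FrontMaterial.diffuse * NdotL;

    // Specular: only where the surface faces the light
    vec4 specular = vec4(0.0);
    if (NdotL > 0.0) {
        float NdotH = max(dot(N, H), 0.0);
        specular = gl_LightSource[0].specular * gl_FrontMaterial.specular
                 * pow(NdotH, gl_FrontMaterial.shininess);
    }

    gl_FragColor = ambient + diffuse + specular;
}
```

Gating the specular term on `NdotL` keeps highlights from appearing on faces turned away from the light.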

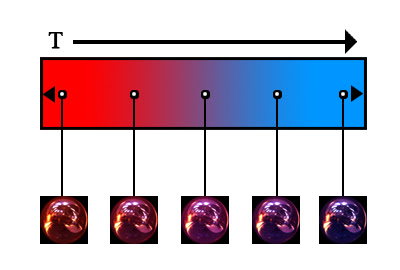

To simulate the variation in surface shape needed to pull off the appearance of an orange, I used a popular technique called "bump-mapping". Bump-mapping is generally accomplished by sampling a normal map whose RGB values are interpreted as XYZ vectors. These vectors are used in place of the interpolated geometric normals of the surface the shader is applied to. Texture channels are stored in the [0, 1] range, so each sample is first remapped to [-1, 1] by multiplying by 2.0 and subtracting 1.0.

Next, the Lambert term for the normal is calculated, which is simply the dot product of the normal and the light direction. If the Lambert term is above 0.0, the specular term is calculated and added to the final color for the fragment. Here is some sample code to illustrate:

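```glsl
// Sample the normal map and remap each channel from [0, 1] to [-1, 1]
vec3 N = normalize(texture2D(normalMap, gl_TexCoord[0].st).rgb * 2.0 - 1.0);

// L (normalized light direction) and eyeVec (view vector) are assumed to be
// in the same space as the normal map; final_color is assumed to be declared
// earlier, e.g. holding the ambient term
float lambert_term = max(dot(N, L), 0.0);

if (lambert_term > 0.0) {
    // Diffuse contribution, scaled by the Lambert term
    final_color += gl_LightSource[0].diffuse * gl_FrontMaterial.diffuse * lambert_term;

    // Compute specular
    vec3 E = normalize(eyeVec);
    vec3 R = reflect(-L, N);
    float specular = pow(max(dot(R, E), 0.0), gl_FrontMaterial.shininess);
    final_color += gl_LightSource[0].specular * gl_FrontMaterial.specular * specular;
}
```

The finished shader combines texturing, displacement, and normal mapping. The result simulates a complex surface whose lighting follows the surface variance, all on a low-poly (16 x 16) quadric sphere. A real-time rotation animation of the orange shader is shown below: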

Fig 1.2: Orange shader rotation animation
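The sample fragment code expects `L` and `eyeVec` to be expressed in the same space as the normal map. For a tangent-space normal map, one way to supply them is the minimal GLSL 1.20 vertex-shader sketch below. It is not the code from this project, and it rests on several assumptions:

- The per-vertex `tangent` attribute and the `lightVec` varying are hypothetical names.
- The light is a point light.
- The tangent frame is right-handed.
- The fragment shader then sets `vec3 L = normalize(lightVec);`.

```glsl
#version 120

// Hypothetical per-vertex tangent supplied by the host application
attribute vec3 tangent;

// Outputs consumed by the normal-mapping fragment code
varying vec3 lightVec;
varying vec3 eyeVec;

void main()
{
    gl_TexCoord[0] = gl_MultiTexCoord0;
    gl_Position = ftransform();

    // Build an eye-space tangent frame (right-handed assumed)
    vec3 n = normalize(gl_NormalMatrix * gl_Normal);
    vec3 t = normalize(gl_NormalMatrix * tangent);
    vec3 b = cross(n, t);

    // Eye-space vertex position, vector to a point light, and view vector
    vec3 vVertex = vec3(gl_ModelViewMatrix * gl_Vertex);
    vec3 toLight = gl_LightSource[0].position.xyz - vVertex;
    vec3 toEye = -vVertex;

    // Project both vectors onto the tangent frame
    lightVec = vec3(dot(toLight, t), dot(toLight, b), dot(toLight, n));
    eyeVec   = vec3(dot(toEye, t),   dot(toEye, b),   dot(toEye, n));
}
```

Transforming the light and view vectors per vertex, rather than transforming every sampled normal per fragment, keeps the fragment work down to the handful of operations shown in the sample code.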

Sources for general information/reference and equation images:

ozone3D.net

Lighthouse3D.com

OpenGL.org