There are several ways to get Python moving faster, some a bit uglier than others. Code speedups with Python are usually about trading readability for performance, but that doesn't mean the implementation can't be straightforward. In the past, I have used the usual bag of Python tricks: list comprehensions, method/variable assignment outside loops, generators, and countless other pure-Python solutions.
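As a rough sketch of what those pure-Python tricks look like, here is the same toy computation written four ways. The function names and the square-root workload are made up for illustration; they are not the code from the tests further down.

```python
import math


def roots_naive(values):
    # Baseline: looks up math.sqrt and out.append on every iteration.
    out = []
    for v in values:
        out.append(math.sqrt(v))
    return out


def roots_hoisted(values):
    # Method/variable assignment outside the loop: bind the lookups once.
    sqrt = math.sqrt
    out = []
    append = out.append
    for v in values:
        append(sqrt(v))
    return out


def roots_comprehension(values):
    # List comprehension: the loop and the append happen inside the interpreter.
    sqrt = math.sqrt
    return [sqrt(v) for v in values]


def roots_lazy(values):
    # Generator expression: results are produced one at a time, on demand.
    sqrt = math.sqrt
    return (sqrt(v) for v in values)


if __name__ == "__main__":
    data = [0, 1, 4, 9]
    print(roots_naive(data))
    print(roots_hoisted(data))
    print(roots_comprehension(data))
    print(list(roots_lazy(data)))
```

All four return the same values; how much each variant actually saves depends on the interpreter version and the workload, so it is worth timing them (for example with the standard `timeit` module) before committing to the less readable form.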

Recently, I stumbled upon Weave, a module inside the popular SciPy library that allows C code to be mixed with Python code. Weave lets the coder stay inside the bounds of Python, yet use the power of C to compute the heavier algorithms. Not only does it provide a speed increase... it beats Java. Consider this (nonsensical) algorithm comparison between pure Python, Java, and Weave + Python:

Python Example:

Time: 1.681 sec.

Java Example:

Time: 0.037 sec.

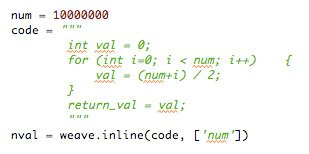

Python + Weave Example:

Time: 0.017 sec.

With identical algorithms in all three tests, Weave with Python was nearly 100 times faster (98.9x) than pure Python alone. Compared to Java, Weave + Python was a shade over 2 times faster (2.1x). Although the Python/Weave code itself is bigger than the pure Python and Java examples, the speed it provides is absolutely phenomenal, and you never have to leave your .py file.
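For anyone who wants to check the arithmetic, the ratios can be recomputed from the three timings reported above. This is only a small sketch: the `timings` dictionary and the `speedup` helper are names invented here, and because the published timings are rounded to three decimals, the last digit of a ratio can differ slightly from one computed on the raw measurements.

```python
# Timings in seconds, copied from the three tests above.
timings = {"python": 1.681, "java": 0.037, "weave": 0.017}


def speedup(baseline, candidate):
    # How many times faster `candidate` ran compared to `baseline`.
    return baseline / candidate


python_vs_weave = speedup(timings["python"], timings["weave"])
java_vs_weave = speedup(timings["java"], timings["weave"])

print("Weave vs. pure Python:", python_vs_weave)
print("Weave vs. Java:", java_vs_weave)
```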

Hopefully, I'll be able to use the power of mixing C with Python for more elaborate purposes, but this test alone is enough to get me excited about future projects with Python.