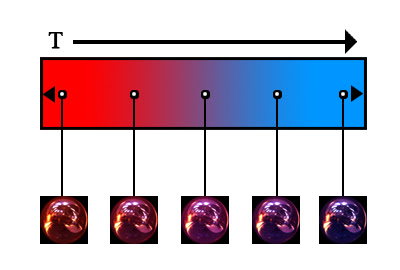

I took the route of writing an algorithm that finds and stores the most dominant colors in an image, then uses each sample as a control point within a variable-length, linearly smoothed gradient, with the most dominant colors ordered from left to right. Below are some examples of the results, where the top is the source that was sampled and the gradient is the output visualized:

Fig 1.1: Sky converted to a gradient based on dominant colors

Fig 1.2: Composition VI, W. Kandinsky - image to gradient conversion

Fig 1.3: Composition VII, W. Kandinsky - image to gradient conversion
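The image-to-gradient step can be sketched roughly as follows. This is a minimal illustration rather than the algorithm actually used here: it assumes "dominant" means "most frequent after coarse quantization", that an image is available as a flat list of `(r, g, b)` tuples, and that control points are evenly spaced. The names `dominant_colors`, `linear_gradient`, `k`, and `bucket` are hypothetical.

```python
from collections import Counter


def dominant_colors(pixels, k=5, bucket=32):
    """Return up to k colors, most frequent first.

    Assumption: colors are grouped into coarse buckets of `bucket` levels
    per channel, and each bucket is represented by the mean of its pixels.
    """
    counts = Counter()
    sums = {}
    for r, g, b in pixels:
        key = (r // bucket, g // bucket, b // bucket)
        counts[key] += 1
        sr, sg, sb = sums.get(key, (0, 0, 0))
        sums[key] = (sr + r, sg + g, sb + b)

    colors = []
    for key, n in counts.most_common(k):
        sr, sg, sb = sums[key]
        colors.append((sr // n, sg // n, sb // n))
    return colors


def linear_gradient(stops, width):
    """Render `width` colors by interpolating linearly between the stops.

    Assumption: the stops are spaced evenly from left to right.
    """
    if not stops:
        raise ValueError("at least one control point is required")
    if len(stops) == 1 or width == 1:
        return [stops[0]] * width

    segments = len(stops) - 1
    out = []
    for x in range(width):
        t = x / (width - 1) * segments      # position measured in segments
        i = min(int(t), segments - 1)       # index of the left-hand stop
        f = t - i                           # fraction of the way to the next stop
        a, b = stops[i], stops[i + 1]
        out.append(tuple(round(a[c] + (b[c] - a[c]) * f) for c in range(3)))
    return out
```

With these two pieces, `linear_gradient(dominant_colors(pixels), width)` would produce one row of the output strip, and `width` can be varied to change the gradient's length.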

Now that a method exists to translate static images into gradients, writing a translator for a video feed becomes a trivial task, since video can be treated as a series of images. Simple alterations to a subsystem allow a calculated sample of each frame to be placed in a gradient. So, if a video feed were parsed once every hour for twenty-four hours, a gradient of length n could be constructed, linearly smoothed evenly across each respective control point based on n+1 distance.
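As a rough sketch of the video case, the snippet below treats each sampled frame as one control point and spaces the points evenly along the gradient. It rests on several assumptions: frames arrive as flat lists of `(r, g, b)` tuples (for example, one per hour), a frame's color is the center of its most frequent coarse color bucket, and positions run from 0 to 1. The helpers `frame_color`, `control_points`, and `sample` are hypothetical names, not part of the original system.

```python
from collections import Counter


def frame_color(pixels, bucket=32):
    """Most frequent coarse color of one frame (assumed meaning of 'dominant')."""
    buckets = Counter((r // bucket, g // bucket, b // bucket) for r, g, b in pixels)
    key, _ = buckets.most_common(1)[0]
    # Represent the winning bucket by its center value on each channel.
    return tuple(c * bucket + bucket // 2 for c in key)


def control_points(frames):
    """Pair each sampled frame's color with an evenly spaced position in [0, 1]."""
    n = len(frames)
    if n == 1:
        return [(0.0, frame_color(frames[0]))]
    return [(i / (n - 1), frame_color(f)) for i, f in enumerate(frames)]


def sample(points, t):
    """Linearly interpolate the gradient color at position t in [0, 1]."""
    for (p0, c0), (p1, c1) in zip(points, points[1:]):
        if p0 <= t <= p1:
            f = (t - p0) / (p1 - p0)
            return tuple(round(a + (b - a) * f) for a, b in zip(c0, c1))
    return points[-1][1]
```

Feeding in twenty-four hourly frames would yield twenty-four control points, and calling `sample` across a range of positions renders the day as a single smoothed strip.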